Are you considering purchasing or upgrading a server and deciding between a new GPU or CPU? Do you want to examine the differences between GPUs and CPUs in terms of speed and performance? Or are you wondering, “Do you need a graphics card for a server?”

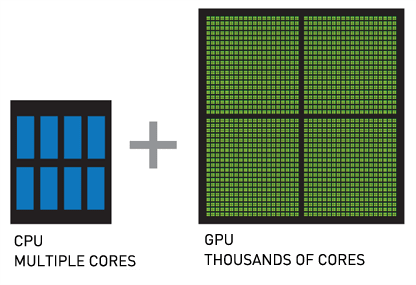

More and more web application managers are considering adding graphics cards to their servers. And the numbers are compelling: CPUs have a high cost per core, while GPUs have a low cost per core. For about the same investment, you could get a dozen or two additional CPU cores or a few thousand GPU cores. That’s the power of GPUs, or Graphics Processing Units.

In fact, since June 2018, more of the new processing power added to the world’s top 500 supercomputers has come from GPUs than from CPUs. And companies like Microsoft, Facebook, Google, and Baidu are already using this technology to do more.

What is the Difference Between a GPU and a CPU?

Here are the key differences between a CPU and a GPU graphics card:

Graphics Processing Unit (GPU)

A Graphics Processing Unit (GPU) is a type of processor chip specially designed for use on a graphics card. GPUs that are not used specifically for drawing on a computer screen, such as those in a server, are sometimes called General Purpose GPUs (GPGPU).

The clock speed of a GPU may be lower than that of a modern CPU (normally in the range of 500-800 MHz), but each chip packs far more cores. This is one of the most distinct differences between a graphics card and a CPU, and it allows a GPU to perform a lot of basic tasks at the same time.

In their original intended purpose, this meant calculating the positions of hundreds of thousands of polygons simultaneously and determining reflections to quickly render a single shaded image for, say, a video game.
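To make the idea concrete, here is a minimal Python sketch of the kind of work a GPU spreads across its cores. It applies the same small operation independently to every vertex of a shape. It is an illustration, not how a real graphics pipeline is written, and the function names are hypothetical. Plain Python runs the loop sequentially on the CPU. On a GPU, each call to `transform_vertex` would correspond roughly to one thread.

```python
import math

def transform_vertex(vertex, angle, offset):
    """Rotate a 2D vertex about the origin, then translate it.

    Every vertex gets exactly the same instructions; only the data differs.
    """
    x, y = vertex
    c, s = math.cos(angle), math.sin(angle)
    return (x * c - y * s + offset[0], x * s + y * c + offset[1])

def transform_mesh(vertices, angle, offset):
    # No vertex depends on any other, so on a GPU these calls could all run
    # at the same time; here they simply run one after another.
    return [transform_vertex(v, angle, offset) for v in vertices]

# Rotate a unit square by 90 degrees and shift it 10 units to the right.
square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
moved = transform_mesh(square, math.pi / 2, (10.0, 0.0))
print(moved)
```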

A basic graphics card might have 700-1,000 processing cores, while modern powerful cards might have 3,000 processor cores or more.

Additionally, core clock speeds on graphics cards are steadily increasing but are generally still lower than CPU clock speeds, with the latest cards running at around 1.2 GHz per core.

Central Processing Unit (CPU)

A Central Processing Unit (CPU) is the brain of any computer or server. Any dedicated server will come with a physical CPU (sometimes two or four) to perform the basic processing of the operating system. Cloud VPS servers have virtual cores allocated from a physical chip.

Historically, if you had a task that required a lot of processing power, you added more CPU power rather than a graphics card, and allocated more processor clock cycles to the tasks that needed to happen faster.

Many basic servers come with two to eight cores, and some powerful servers have 32, 64 or even more processing cores. In terms of GPU vs CPU speed, CPU cores have a higher clock speed, usually in the range of 2-4 GHz. CPU clock speed is a fundamental difference that needs to be considered when comparing a processor vs a graphics card.
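A back-of-the-envelope calculation with the figures above shows why core count matters so much for parallel work. This sketch is deliberately naive. It multiplies cores by clock speed and assumes one simple operation per core per cycle. It ignores instructions per cycle, CPU vector units, memory bandwidth, and the fact that a GPU core is far simpler than a CPU core. The 32-core, 3 GHz CPU is an assumed example within the ranges given.

```python
def core_cycles_per_second(cores, clock_ghz):
    """Naive aggregate: total core clock cycles per second, in billions."""
    return cores * clock_ghz

gpu = core_cycles_per_second(3000, 1.2)  # powerful card, ~1.2 GHz per core
cpu = core_cycles_per_second(32, 3.0)    # assumed 32-core server CPU at 3 GHz
ratio = gpu / cpu
print(f"GPU: {gpu:.0f}G  CPU: {cpu:.0f}G  ratio: {ratio:.1f}x")
```

Under these assumptions the GPU offers roughly 37.5 times the raw core-cycles. That figure only means something for work that splits cleanly across thousands of cores.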

Why Not Run the Whole Operating System on the GPU?

There are some restrictions when it comes to using a graphics card instead of a CPU. One of the major restrictions is that the cores in a GPU are designed to work in groups that all process the same operation at once (this is referred to as SIMD: Single Instruction, Multiple Data).

So, if you are making 1,000 individual, similar calculations, such as the candidate guesses tried when cracking a password hash, a GPU can work great by executing each one as a thread on its own core with the same instructions.
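The following Python sketch shows why hash cracking suits a GPU. Each candidate guess goes through exactly the same steps: hash it, then compare it with the target. No guess depends on any other. Here the search runs sequentially on the CPU with the standard `hashlib` module, whereas a GPU tool would give each candidate its own thread. The four-digit PIN and the unsalted SHA-256 hash are assumptions chosen to keep the example small.

```python
import hashlib
from itertools import product

def sha256_hex(text):
    return hashlib.sha256(text.encode("utf-8")).hexdigest()

def crack_pin(target_hash, digits=4):
    """Brute-force a numeric PIN by hashing every candidate.

    Each iteration runs the same instructions on different data, mirroring
    the SIMD pattern, and iterations are independent of one another.
    """
    for combo in product("0123456789", repeat=digits):
        candidate = "".join(combo)
        if sha256_hex(candidate) == target_hash:
            return candidate
    return None  # no candidate of this length matched

target = sha256_hex("4821")
print(crack_pin(target))
```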

However, using the graphics card instead of the CPU for kernel operations (like writing files to a disk, opening new index pointers, or controlling system states) would be much slower.

GPUs have higher latency per operation because of their lower clock speed, and because there is more hardware between them and the system’s memory than there is for the CPU (a discrete graphics card typically reaches main memory over the PCIe bus). The CPU’s transport and response times are lower (better) because it is designed to execute individual instructions quickly.

Where the CPU is tuned for low latency, GPUs are tuned for high bandwidth, which is another reason they are suited to massively parallel processing. Graphics cards weren’t designed to perform the quick, individual calculations that CPUs excel at. So, if you are generating a single password hash instead of cracking one, the CPU will likely perform best.
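One way to reason about this trade-off is a simple cost model. Offloading work to a GPU carries a fixed latency, for moving data to the card and launching the work, before the GPU's throughput advantage kicks in. The sketch below uses made-up timing numbers purely for illustration. Real values depend heavily on the hardware and the workload.

```python
def cpu_time_us(n_items, cpu_us_per_item):
    # The CPU starts immediately but processes items one after another.
    return n_items * cpu_us_per_item

def gpu_time_us(n_items, overhead_us, gpu_us_per_item):
    # The GPU pays a fixed transfer/launch latency first, then has a much
    # lower effective cost per item because many items run in parallel.
    return overhead_us + n_items * gpu_us_per_item

def better_device(n_items, cpu_us_per_item=1.0, overhead_us=500.0,
                  gpu_us_per_item=0.01):
    """Pick the faster device under hypothetical timings: 1 us/item on the
    CPU, 500 us fixed GPU overhead, 0.01 us/item effective GPU cost."""
    cpu = cpu_time_us(n_items, cpu_us_per_item)
    gpu = gpu_time_us(n_items, overhead_us, gpu_us_per_item)
    return "CPU" if cpu <= gpu else "GPU"

for n in (1, 100, 1_000, 1_000_000):
    print(n, better_device(n))
```

With these assumed numbers the break-even point is around 505 items. A single hash stays on the CPU, while a million candidate hashes belong on the GPU.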

GPU vs CPU: Can They Work Together?

There isn’t exactly a switch on your system you can turn on to have, for instance, 10% of all computation go to the graphics card. In parallel processing situations, where commands could potentially be offloaded to the GPU for calculation, the instructions to do so must be hard-coded into the program that needs the work performed.

Luckily, graphics card manufacturers like Nvidia, as well as open source developers, provide free libraries for common programming languages like C++ and Python. Developers can use these libraries to have their applications take advantage of GPU processing where it is available.
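A common pattern is to check for a GPU library at runtime and fall back to the CPU when no GPU is present. The sketch below assumes the open source CuPy library, a NumPy-compatible GPU array package, as the GPU option, with NumPy as the CPU fallback. Both are third-party installs, and the code still runs with neither of them by falling back to plain Python. The function names are illustrative.

```python
def get_array_module():
    """Return CuPy if a usable GPU is present, else NumPy, else None."""
    try:
        import cupy  # NumPy-compatible arrays on NVIDIA GPUs (third-party)
        # Raises if the CUDA runtime cannot find a usable GPU.
        if cupy.cuda.runtime.getDeviceCount() > 0:
            return cupy
    except Exception:
        pass  # CuPy not installed, or no working GPU/driver
    try:
        import numpy  # CPU fallback (third-party)
        return numpy
    except ImportError:
        return None

def sum_of_squares(values):
    xp = get_array_module()
    if xp is None:
        return float(sum(v * v for v in values))  # pure-Python fallback
    arr = xp.asarray(values, dtype=xp.float64)
    # The multiply-and-sum runs on whichever device the module targets.
    return float((arr * arr).sum())

print(sum_of_squares([1, 2, 3]))
```

The same application code runs either way. Only the module chosen at startup decides whether the arithmetic lands on the graphics card.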

What are Some of the Applications Where a GPU Might Be Better?

So, do you need a graphics card for a server? It depends. Your server doesn’t have a monitor, but graphics cards can be applied to tasks other than drawing on a screen.

Supercomputers use GPUs for all kinds of intensive general-purpose mathematical processing, but the research is usually scientific in nature:

- Protein chain folding and element modeling.

- Climate and geophysical simulations, such as hurricane prediction or seismic processing.

- Plasma physics.

- Structural analysis.

- Deep learning for artificial intelligence (AI).

One of the more famous uses of graphics cards is mining cryptocurrencies such as Bitcoin.

Mining essentially performs a huge number of hash calculations. The miner tries one value after another until the hash of a block of pending transactions meets a difficulty target. The first machine to find a valid solution, verified by other miners, is rewarded with new bitcoins (though the new coins cannot be spent until a number of further blocks have been added to the chain). Graphics cards are well suited to this because they can compute a very large number of hashes per second in parallel, which is what effective mining requires.
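The search at the heart of proof-of-work mining can be sketched in a few lines of Python. This is a toy version. Real Bitcoin mining applies SHA-256 twice to an 80-byte block header and compares the result against a numeric difficulty target. This sketch hashes a string once and simply requires a number of leading zero hex digits. What matters is the structure: every nonce is tested with identical, independent work, which is why the search spreads so well across many cores.

```python
import hashlib

def mine(block_data, difficulty=4):
    """Find a nonce whose SHA-256 hex digest starts with `difficulty` zeros."""
    prefix = "0" * difficulty
    nonce = 0
    while True:
        digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
        if digest.startswith(prefix):
            return nonce, digest
        nonce += 1  # each attempt is independent of the previous one

nonce, digest = mine("pending transactions: alice->bob 5", difficulty=4)
print(nonce, digest)
```

Checking a claimed solution takes a single hash, which is why other miners can verify it cheaply.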

What about Applications for Commodity Servers?

It’s difficult to do many of these things with a single dedicated web server, but there are certainly more plug-and-play applications. GPUs, as we saw earlier, are really good at performing lots of calculations to size, locate, and draw polygons. So, naturally, one of the tasks they excel at is generating graphics:

- CAD rendering and fluid dynamics.

- 3D modeling and animation.

- Geospatial visualization.

- Video editing and processing.

- Image classification and recognition.

Another sector that has benefited greatly from the move to GPUs in servers is finance and stock market strategy:

- Portfolio risk analysis.

- Market trending.

- Pricing and valuation.

- Big data exploration.
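Pricing and risk workloads often rely on Monte Carlo simulation, where thousands or millions of independent random scenarios are evaluated with the same formula. That is a natural fit for GPU cores. The sketch below prices a European call option by simulating prices under the standard geometric Brownian motion assumption. It then checks the answer against the Black-Scholes closed-form price. The parameters are illustrative, and the code runs sequentially in plain Python rather than on a GPU.

```python
import math
import random

def mc_call_price(s0, strike, rate, vol, t, n_paths, seed=0):
    """Monte Carlo price of a European call under geometric Brownian motion."""
    rng = random.Random(seed)
    drift = (rate - 0.5 * vol * vol) * t
    diffusion = vol * math.sqrt(t)
    total = 0.0
    for _ in range(n_paths):  # every path is independent: ideal for a GPU
        z = rng.gauss(0.0, 1.0)
        s_t = s0 * math.exp(drift + diffusion * z)
        total += max(s_t - strike, 0.0)
    return math.exp(-rate * t) * total / n_paths

def bs_call_price(s0, strike, rate, vol, t):
    """Black-Scholes closed-form price, used here as a reference value."""
    def norm_cdf(x):
        return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))
    d1 = (math.log(s0 / strike) + (rate + 0.5 * vol * vol) * t) / (vol * math.sqrt(t))
    d2 = d1 - vol * math.sqrt(t)
    return s0 * norm_cdf(d1) - strike * math.exp(-rate * t) * norm_cdf(d2)

mc = mc_call_price(100.0, 100.0, 0.05, 0.2, 1.0, n_paths=200_000)
bs = bs_call_price(100.0, 100.0, 0.05, 0.2, 1.0)
print(f"Monte Carlo: {mc:.3f}  Black-Scholes: {bs:.3f}")
```

The simulation's error shrinks with the square root of the number of paths. This is why firms want to run millions of paths at once.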

A host of additional applications exist which you might not consider for use on graphics cards, too:

- Speech to text and voice processing.

- Relational databases and parallel queries.

- End-user deep learning and marketing strategy development.

- Identifying defects in manufactured parts through image recognition.

- Password recovery (hash cracking).

- Medical imaging.
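Parallel database queries follow a similar split-and-combine pattern. Each worker scans its own slice of a column and produces a partial result, and the partial results are merged at the end. The sketch below uses Python's `concurrent.futures` thread pool as a stand-in for GPU cores. In CPython, threads will not actually speed up this pure-Python loop; the point is the structure of the work. The table and column names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

def partial_stats(chunk, threshold):
    """Scan one slice: count and sum the values above the threshold."""
    count, total = 0, 0.0
    for amount in chunk:
        if amount > threshold:
            count += 1
            total += amount
    return count, total

def parallel_filter_sum(column, threshold, n_workers=4):
    # Split the column into roughly equal, independent slices.
    size = max(1, -(-len(column) // n_workers))  # ceiling division
    chunks = [column[i:i + size] for i in range(0, len(column), size)]
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(lambda c: partial_stats(c, threshold), chunks))
    # Reduce step: combine the per-slice results into the final answer.
    return sum(c for c, _ in partials), sum(t for _, t in partials)

# Roughly: SELECT COUNT(*), SUM(amount) FROM orders WHERE amount > 50
amounts = [float(x % 100) for x in range(10_000)]
result = parallel_filter_sum(amounts, threshold=50.0)
print(result)
```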

This is just the start of what GPUs can do for you! Nvidia publishes a list of applications that support GPU-accelerated processing.

Do You Need a Graphics Card for a Server? Are GPUs Included by Default?

Dedicated GPUs do not come with dedicated servers by default, since they are very application-specific, and there’s not much point in getting one if your application can’t make use of it. If you know you need one, our hosting advisors are happy to talk with you about your application’s requirements.
