Not all traffic is created equal. Although you may be seeing a steady flow of visitors to your website, be mindful that a sizable chunk of web traffic is generated by bots rather than people. But what is bot traffic, and how can it be prevented?

Let’s take a deeper look into how you can stop spammy visitors.

What is Bot Traffic?

In a nutshell, bot traffic is any non-human arrival on your website. The term is typically viewed negatively, but not all bot traffic is bad traffic. There are many misunderstandings surrounding bot traffic, so site owners need to know the variations and how to take preventative measures when necessary, especially against large traffic spikes that can quickly consume your server resources.

Statistics show that bad bot traffic is growing significantly and is now the dominant form of non-human traffic. With developments in artificial intelligence and automated services bringing more bots into the online landscape, should website owners do more to take action against this developing trend?

To fully comprehend what bot traffic is, let’s delve into the significance of the various types of automated robots online and the actions they perform.

Bot Traffic on Your Site

The key to understanding bot traffic is to acknowledge that these arrivals on your site can vary greatly, and it’s perfectly normal to have robots crawling your pages. The most common forms of bot traffic are search engine crawlers, SEO tool crawlers, copyright bots, and other innocuous automated traffic that is largely harmless.

However, there are also growing numbers of more malicious bots out there looking to harm your website or business. These bot types typically include spambots, DDoS (Distributed Denial of Service) bots, scrapers, and others intent on using your website for the illegitimate benefit of their programmers.

Despite there being no human involvement in the process, bot arrivals on your pages still count as visits. This means that if you’re not working to limit your spam traffic, these visitors could skew your analytics metrics like your page views, bounce rates, session durations, visitors’ locations, and subsequent conversions.
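To make the skew concrete, here is a minimal sketch with made-up numbers. The `Session` class and its `is_bot` flag are hypothetical stand-ins for whatever your analytics export and bot filter provide; the point is only that a handful of single-page bot visits can move a metric like bounce rate a long way.

```python
from dataclasses import dataclass


@dataclass
class Session:
    pages_viewed: int
    is_bot: bool  # hypothetical flag; in practice it would come from a bot filter


def bounce_rate(sessions):
    """Share of sessions that viewed exactly one page."""
    if not sessions:
        return 0.0
    bounces = sum(1 for s in sessions if s.pages_viewed == 1)
    return bounces / len(sessions)


# Made-up sample: 6 human sessions (2 of them bounce) and 4 bot sessions (all bounce).
sessions = (
    [Session(1, False)] * 2
    + [Session(3, False)] * 4
    + [Session(1, True)] * 4
)

all_rate = bounce_rate(sessions)
human_rate = bounce_rate([s for s in sessions if not s.is_bot])

print(f"Bounce rate, all traffic: {all_rate:.0%}")  # 60%
print(f"Bounce rate, humans only: {human_rate:.0%}")  # 33%
```

In this invented sample, the bots nearly double the reported bounce rate, which could make a healthy page look like it needs a redesign.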

These inconsistencies in your metrics can be a source of frustration for website owners, and it can be tough to measure the performance of a site that’s flooded with robot activity.

With the potential dangers of allowing robots to fester on your pages, let’s explore some of the most effective ways of limiting the impact of bot traffic on your website and delve into the best approaches towards blocking out the bad bots online.

Stay on the Lookout for Vulnerabilities

To better sift through your site’s traffic quality and nullify any bots that could harm your pages, it’s worth running frequent vulnerability scans to identify any possible holes in your security.

Vulnerability scans detect and classify weaknesses in your machines, networks, and communication equipment, and help predict how effective your countermeasures will be. Scans can be performed by an organization’s internal IT department or by an external provider, for example with Liquid Web’s Vulnerability Scanning tool.

When you hire a managed service provider, you naturally expect that they’ll stay on top of updates and monitor your system regularly. Even if an internal IT department can conduct a vulnerability scan, a service provider has both the time and the resources for the job, which is intensive and requires a hefty amount of bandwidth.

Furthermore, when vulnerabilities are detected, staff carry the burden of figuring out how to repair the vulnerability and secure the network accordingly.

Remove Bots From Your Analytics Equation

Many website owners turn to analytics data from platforms like Google Analytics to better manage traffic flow through their sites and gain insights into how to optimize their pages better.

However, it can be challenging to detect bot traffic when analyzing data through more rudimentary insights engines because of how robots access and explore your pages. Fortunately, Google has worked on remedying this in Google Analytics 4, which automatically identifies traffic from known bots and filters it out of the insights available.

While automatic exclusion isn’t a feature of Universal Analytics (the previous generation of Google Analytics), it’s possible to manually filter out hits from known bots and spiders to ensure that your website decisions aren’t informed by non-human traffic.

Robotic traffic may not always arrive on your site with the intention of doing harm, but it can often lead to inaccuracies in how you interpret the volume of visitors navigating to your pages. This can mean that underperforming pages that are drawing in more bot visitors may never get the changes that they need.

To enable this filter in Universal Analytics, open the Admin View settings and check Exclude all hits from known bots and spiders. Because it is a simple checkbox, you can set it once and forget about it, knowing that known bots are being filtered out of your reports. The feature works by automatically excluding traffic that’s listed on the Interactive Advertising Bureau’s International Spiders & Bots List. Although this means that some bots that haven’t yet been added to the list may still slip through the net, it’s a simple way to add some quality control to your site metrics.

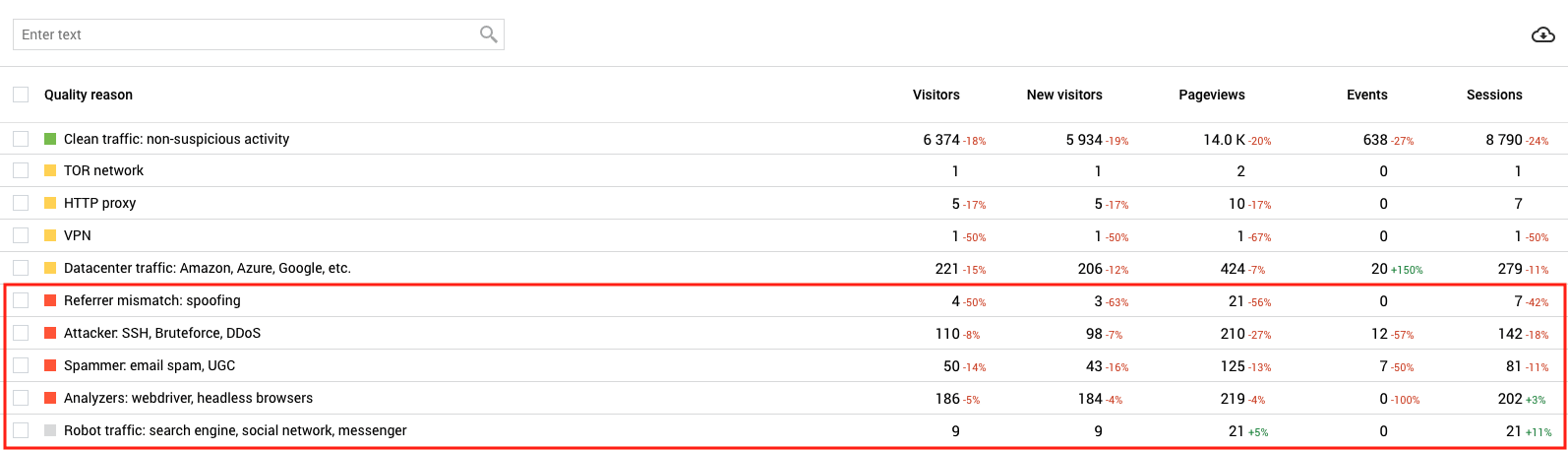
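The list-based exclusion described above can be sketched roughly as follows. This is an illustration of the general idea, not Google's implementation: the four patterns are a tiny hand-picked stand-in for the much larger IAB list, and `is_known_bot`, `exclude_known_bots`, and the sample hits are all hypothetical.

```python
import re

# Illustrative patterns only; a real bot list is far larger and updated regularly.
KNOWN_BOT_PATTERNS = ["googlebot", "bingbot", "ahrefsbot", "semrushbot"]
BOT_REGEX = re.compile("|".join(KNOWN_BOT_PATTERNS), re.IGNORECASE)


def is_known_bot(user_agent: str) -> bool:
    """True if the user agent matches any pattern on the list."""
    return bool(BOT_REGEX.search(user_agent))


def exclude_known_bots(hits):
    """Keep only hits whose user agent is not on the known-bot list."""
    return [h for h in hits if not is_known_bot(h["user_agent"])]


hits = [
    {"path": "/", "user_agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0"},
    {"path": "/pricing", "user_agent": "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"},
    {"path": "/blog", "user_agent": "UnlistedScraper/0.1"},  # made-up bot not on the list
]

human_hits = exclude_known_bots(hits)
print([h["path"] for h in human_hits])  # ['/', '/blog']
```

Note that the made-up `UnlistedScraper` hit survives the filter, which mirrors the limitation mentioned above: a list can only catch the bots it already knows about, and user agents can be spoofed.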

Another excellent analytics tool is Finteza, which can identify bot traffic and categorize it by type of robot (DDoS, spammer, analyzer, or clean traffic). Identifying and categorizing these bots can offer fresh insights into how users with bad intentions target your website.

If your website is continually failing to see results from the insight-driven changes you make, it could be worth consulting more advanced analytical engines to check whether your traffic is all that it seems. Unwittingly adapting your pages to suit bots could amount to a disastrous waste of resources.

Protecting From the Threat of DDoS

DDoS botnets are one of the most hazardous forms of bad bot traffic. A DDoS botnet is a group of Internet-enabled devices that have been infected with malware so they can be controlled remotely without their owners’ knowledge. From the hacker’s perspective, botnet devices are interconnected resources that can be used for just about any purpose, the most harmful of which is DDoS.

Any single botnet device can be compromised by multiple perpetrators, with each using it for different forms of attack simultaneously. For example, a hacker could order a PC infected with malware to rapidly access a website as part of a more widespread DDoS attack. Meanwhile, the actual owner of the computer could be using it to shop online while wholly unaware of what’s going on.

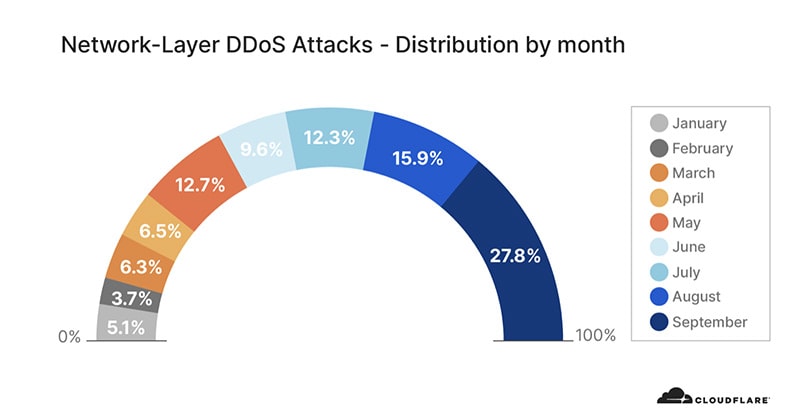

DDoS attacks are rising in prevalence and can bombard a website’s server with so many requests that it becomes entirely unreachable, resulting in significant downtime and lost conversions for business sites.

Fortunately, protection against DDoS attacks is available. Liquid Web offers various levels of DDoS protection depending on your needs.
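One small building block for spotting a request flood is a per-client sliding-window counter, sketched below. This is a simplified illustration under stated assumptions, not a DDoS defense on its own: the `SlidingWindowCounter` class and its thresholds are hypothetical, and because a distributed attack arrives from many addresses at once, per-IP limits are normally combined with network-level protection.

```python
from collections import defaultdict, deque


class SlidingWindowCounter:
    """Allow at most max_requests per client within the last window_seconds."""

    def __init__(self, max_requests: int, window_seconds: float):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # client IP -> timestamps of allowed requests

    def allow(self, client_ip: str, now: float) -> bool:
        window = self._hits[client_ip]
        # Drop timestamps that have fallen out of the window.
        while window and now - window[0] >= self.window_seconds:
            window.popleft()
        if len(window) >= self.max_requests:
            return False  # too many recent requests from this client
        window.append(now)
        return True


# 203.0.113.7 is an address from a range reserved for documentation.
limiter = SlidingWindowCounter(max_requests=3, window_seconds=1.0)
results = [limiter.allow("203.0.113.7", now=t) for t in (0.0, 0.1, 0.2, 0.3, 1.5)]
print(results)  # [True, True, True, False, True]
```

The fourth request is rejected because three requests already fall inside the one-second window; by 1.5 seconds those have expired and the client is allowed again.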

Stay Alert to Potential Intruders

While many website owners opt to take on anti-malware and antivirus tools to protect their website, this approach can sometimes come up short in offering protection against unidentified threats that may already be making changes to your system. To fully protect your servers from bad traffic, it’s vital to consider adding more advanced capabilities in the form of an Intrusion Detection System.

Threat Stack can collect, monitor, and analyze security telemetry across server environments to detect anomalies and weigh up threats accordingly.
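As a rough illustration of what detecting an anomaly in telemetry can mean, the sketch below scores new readings against a baseline using a z-score. This is a generic statistical technique chosen for the example, not a description of how Threat Stack works internally; the metric (failed logins per minute), the sample numbers, and the threshold of 3 are all assumptions.

```python
from statistics import mean, stdev


def z_score(value, baseline):
    """How many standard deviations a value sits from the baseline mean."""
    sigma = stdev(baseline)
    if sigma == 0:
        return 0.0  # flat baseline: this simple sketch cannot score deviations
    return (value - mean(baseline)) / sigma


def flag_anomalies(observations, baseline, threshold=3.0):
    """Return the observations whose z-score exceeds the threshold."""
    return [v for v in observations if abs(z_score(v, baseline)) > threshold]


# Made-up telemetry: failed logins per minute during a normal period.
baseline = [4, 5, 6, 5, 4, 6, 5, 5]
new_readings = [5, 6, 40]

print(flag_anomalies(new_readings, baseline))  # [40]
```

Real intrusion detection systems combine many signals and rules, but the underlying idea is similar: establish what normal looks like, then surface behavior that departs from it.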

The protective measures can also help businesses meet security standards, including those required by PCI DSS, SOC 2, and HIPAA/HITECH compliance regulations.

Understanding Bot Traffic to Stay Secure and Compliant

In a world where bad bots are on the rise, businesses must identify bot traffic to understand their metrics better and prevent bots from infiltrating their systems. With the right blend of security measures and compliance assistance, it’s possible to stay safe from the disruptive threats and evil intentions of cybercriminals.

Your website should be your pride and joy, and with the proper measures in place, you can ensure that it doesn’t fall into the wrong hands.
